We get to go to a lot of conferences. And we’re always amazed at how many vendors and commentators stand up at events and trade shows and say things like, “The objective of analytics is to discover new insight about the business”.

Let us be very clear. If the only thing that your analytic project delivers is insight, it has almost certainly failed. Your objective must not be merely to discover something that you didn’t know, or to quantify something that you thought you did — rather it must be to use that insight to change the way you do business. If your model never leaves the lab, there can never be any return on your investment in data and analytics.

“Analytics must aim to deliver insight to change the way you do business”

The goal of machine learning is often — though not always — to train a model on historical, labelled data (i.e., data for which the outcome is known) in order to predict the value of some quantity on the basis of a new data item for which the target value or classification is unknown. We might, for example, want to predict the lifetime value of customer XYZ, or to predict whether a transaction is fraudulent or not.

Before we can use an analytic model to change the way we do business, it has to pass two tests. Firstly, it must be sufficiently accurate. Secondly, we must be able to deploy it so that it can make recommendations and predictions on the basis of data that are available to us — and sufficiently quickly that we are able to do something about them.

Some obvious questions arise from all of this. How do we know if our model is “good enough” to base business decisions on? And since we could create many different models of the same reality — of arbitrary complexity — how do we know when to stop our modelling efforts? When do we have the most bang we are ever going to get, so that we should stop throwing more bucks at our model?

So far, so abstract. Let’s try to make this discussion a bit more concrete by looking at some accuracy metrics for a real-world model that one of us actually developed for a customer.

A working example of machine learning

The business objective in this particular case was to avoid delays and cancellations of rail services by predicting train failures up to 36 hours before they occurred. To do this, we trained a machine learning model on the millions of data points generated by the thousands of sensors that instrument the trains to identify the characteristic signatures that had preceded historical failure events.

We built our model using a training data set of historical observations — sensor data from trains that we labelled with outcomes extracted from engineers’ reports and operations logs. For the historical data, we know whether the train failed — or whether it did not.

In fact, we didn’t use all of our labelled historical data to train our model. Rather, we reserved some of that data and ring-fenced it in a so-called “holdout” data set. That means that we have a set of data that the model has never seen, which we can use to test the accuracy of our predictions and to make sure that our model does not “over-fit” the training data.
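To make the idea of a holdout set concrete, here is a minimal sketch in Python. It is an illustration rather than the procedure we used on the project: the function name `split_holdout`, the 20 percent holdout fraction and the toy records are all assumptions, since the split we actually used is not described here.

```python
import random


def split_holdout(records, holdout_fraction=0.2, seed=0):
    """Shuffle labelled records and reserve a fraction as a holdout set.

    holdout_fraction=0.2 is an illustrative default, not a figure
    taken from the rail project.
    """
    if not 0.0 < holdout_fraction < 1.0:
        raise ValueError("holdout_fraction must be between 0 and 1")
    shuffled = list(records)               # copy, so the input is left untouched
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is repeatable
    n_holdout = round(len(shuffled) * holdout_fraction)
    holdout = shuffled[:n_holdout]         # never shown to the model in training
    training = shuffled[n_holdout:]        # used to fit the model
    return training, holdout


# Hypothetical labelled observations: (observation_id, failed_within_36h)
records = [(i, i % 10 == 0) for i in range(100)]
training, holdout = split_holdout(records)
print(len(training), len(holdout))
```

For sensor data collected over time, it can also be sensible to split by date rather than at random, so that the model is always tested on observations made after those it was trained on.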

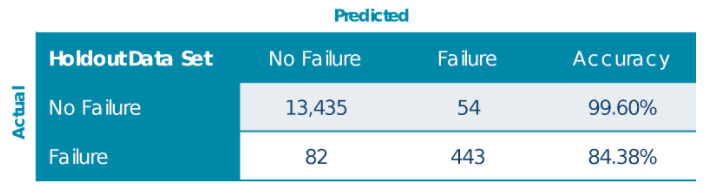

The table shown above is a “confusion matrix” resulting from the application of the model built from the training data set to the holdout data set. It enables us to understand what we predicted would happen versus what actually did happen.

You can see that our model is 84 percent accurate in predicting failures: we correctly predicted that a failure would occur, where one subsequently did occur within the next 36 hours, in 443 out of 525 (82+443) cases. Strictly speaking, this is what data scientists call recall or sensitivity (the share of actual failures that the model catches) rather than accuracy across all of the trips in the holdout set. That’s a pretty good rate for this sort of model, and certainly good enough for the model to be useful for our customer.

Just as important as this headline figure, however, are the numbers of so-called type-one errors (false positives) and type-two errors (false negatives). In our case, we incorrectly predict 54 failures where none occur. These errors represent 54 situations where we might potentially have withdrawn a train from service for maintenance it did not need. Equally, there are 82 type-two errors. That means that for every 14,014 (13,435+54+82+443) trips made by our trains, we should anticipate that they will unexpectedly fail on 82 occasions, or 0.6 percent of the time.
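The figures quoted above can be reproduced from the four cells of the confusion matrix. The short Python sketch below does the arithmetic; the four counts come from the text, while the function and variable names are our own choices for illustration.

```python
# Holdout-set counts quoted in the text.
true_negatives = 13_435   # no failure predicted, none occurred
false_positives = 54      # failure predicted, none occurred (type-one errors)
false_negatives = 82      # no failure predicted, one occurred (type-two errors)
true_positives = 443      # failure predicted, one occurred


def confusion_metrics(tn, fp, fn, tp):
    """Derive common quality measures from the cells of a 2x2 confusion matrix."""
    total = tn + fp + fn + tp
    return {
        "total": total,
        "recall": tp / (tp + fn),         # share of real failures we caught
        "precision": tp / (tp + fp),      # share of failure alerts that were right
        "specificity": tn / (tn + fp),    # share of healthy trips left alone
        "accuracy": (tp + tn) / total,    # share of all predictions that were right
        "missed_failure_rate": fn / total,  # unexpected failures per trip
    }


metrics = confusion_metrics(
    true_negatives, false_positives, false_negatives, true_positives
)
print(f"trips in holdout set: {metrics['total']}")
for name in ("recall", "precision", "specificity", "accuracy", "missed_failure_rate"):
    print(f"{name}: {metrics[name]:.1%}")
```

Run against these counts, recall comes out at about 84 percent (443 of 525) and the missed-failure rate at about 0.6 percent (82 of 14,014), matching the figures above. Precision is about 89 percent and overall accuracy about 99 percent, which shows how different a model can look depending on which measure is quoted.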

Model inaccuracy costs money

Because both false positive and false negative errors incur costs, we have to be very clear about what the acceptable tolerance for these kinds of errors is. When reviewing the business case for deploying a new model, ensure that these costs have been properly accounted for.
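One simple way to account for these costs is to put a price on each kind of error and multiply by the number of times it occurs. The sketch below does this in Python. The error counts are those from our holdout set, but the two unit costs are invented placeholders for illustration only; they are not figures from the rail project, and a real business case would need real ones.

```python
def total_error_cost(false_positives, false_negatives,
                     cost_per_false_positive, cost_per_false_negative):
    """Total cost of model errors, given a unit cost for each error type."""
    return (false_positives * cost_per_false_positive
            + false_negatives * cost_per_false_negative)


# Error counts from the holdout set described in the text.
false_positives = 54
false_negatives = 82
trips = 14_014

# HYPOTHETICAL unit costs, chosen only to illustrate the arithmetic.
cost_unneeded_maintenance = 2_000    # a train withdrawn when it was healthy
cost_unexpected_failure = 50_000     # a failure in service that we missed

total_cost = total_error_cost(
    false_positives, false_negatives,
    cost_unneeded_maintenance, cost_unexpected_failure,
)
cost_per_trip = total_cost / trips
print(f"total cost of errors: {total_cost}")
print(f"average cost per trip: {cost_per_trip:.2f}")
```

With costs as lopsided as these placeholders, missed failures dominate the total, which is the kind of asymmetry that should drive the decision about how many false alarms are tolerable.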

If you are a business leader who works with data scientists, you may encounter lots of different shorthand for these and related constructs. Precision, recall, specificity, accuracy, odds ratio, receiver operating characteristic (ROC), area under the curve (AUC), etc. — all of these are measures of model quality. This is not the place to describe them all in detail — see the Provost and Fawcett book or Salfner, Lenk and Malek’s slightly more academic treatment in the context of predicting software system failures — but be aware that these different measures are associated with different trade-offs that are simultaneously both a trap for the unwary and an opportunity for the unscrupulous. Caveat emptor!

When we have satisfied ourselves that our model is sufficiently accurate, we need to establish whether it can actually be deployed, and — crucially — whether it can be deployed so that the predictions that it makes are actionable. This is the second test that we referred to at the start of this discussion.

In the case of our preventative maintenance model, deployment is relatively simple: as soon as trains return to the depot, data from the train sensors are uploaded and scored by our model. If a failure is predicted, we can establish the probability of the likely failure and the affected components and schedule emergency preventative maintenance, as required. This particular model is able to predict the failure of a train up to 36 hours in advance, so waiting the three hours until the end of the journey to collect and score the data is no problem. But in other situations (an online application for credit, for example, where we might want to predict the likelihood of default and price the loan accordingly) we might need to collect and score data continuously, so that our model’s predictions are available quickly enough to be actionable without disrupting the way that we do business.
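The test of whether a prediction is actionable boils down to a time budget: the warning the model gives us, minus the delay in getting data to the model, minus the time we need to respond. Here is a rough Python sketch of that check. The 36-hour horizon and three-hour delay come from the rail example; the eight-hour response time and all of the credit-application numbers are hypothetical, and because the model predicts failures "up to" 36 hours ahead, the real warning may be shorter.

```python
from datetime import timedelta


def time_left_to_act(prediction_horizon, data_delay, response_time):
    """Slack remaining once data collection and the response are accounted for."""
    return prediction_horizon - data_delay - response_time


def is_actionable(prediction_horizon, data_delay, response_time):
    """A prediction is actionable if we can respond before the event occurs."""
    slack = time_left_to_act(prediction_horizon, data_delay, response_time)
    return slack >= timedelta(0)


# Rail example: horizon and data delay from the text; response time is assumed.
rail_slack = time_left_to_act(
    prediction_horizon=timedelta(hours=36),
    data_delay=timedelta(hours=3),
    response_time=timedelta(hours=8),   # hypothetical time to schedule maintenance
)
print(rail_slack)

# Credit example (all values hypothetical): the applicant expects an answer in
# seconds, so a batch upload every three hours cannot work.
credit_ok = is_actionable(
    prediction_horizon=timedelta(seconds=5),
    data_delay=timedelta(hours=3),
    response_time=timedelta(seconds=1),
)
print(credit_ok)
```

Under these assumptions the rail model leaves a comfortable margin, while the same batch process applied to credit scoring fails the test outright.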

As we explained in a previous episode of this blog, this may mean that we need to construct a very robust data pipeline to support near-real-time data acquisition and scoring — which is why good data engineering is such a necessary and important complement to good data science in getting analytics out of the lab and into the frontlines of your business.