Teradata’s Native Object Store feature, referred to as NOS, enables VantageCloud’s analytical database engine, with ClearScape Analytics, to access objects in object storage buckets through Amazon’s S3 storage access protocol, presenting objects stored there in open data formats as a foreign table. These open data formats include CSV, JSON and Parquet files.

NOS is not a file system, so Vantage doesn’t “mount” it the way it would mount a file system. There is no inode table in server memory and no caching of NOS metadata in Vantage. NOS is a REST endpoint performing primitive operations such as PUTs and GETs. Thus, each time a foreign table is read, Vantage retrieves the data as though it were “seeing” that data for the first time.

A typical mounted filesystem works differently: part of the filesystem, including file location metadata and some data, is copied and maintained in the server’s memory for fast access. NOS, by contrast, is stateless from the server’s point of view. Even when a shared or parallel filesystem is mounted by many clients, its metadata is shared and kept consistent across all of them. For example, if one client creates a new file in the shared filesystem, all of the other clients will “see” the updated metadata and know there is a new file available.

NOS does not behave this way. Clients hold no state about the bucket. A client can GET information about the state of the bucket on request and store it locally, but that state becomes stale almost immediately if other clients are using the bucket. Clients can share data in the bucket without having to “tell” any other client what they did to its contents. For example, if one client writes a new file or object to a folder in a bucket, another client has to go to that folder to “see” what is there. Likewise, when a client reads an object or file in a NOS bucket, it could be completely different from the file the client read the last time it accessed the bucket.
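To make the stale-state point concrete, here is a minimal Python sketch. It does not use NOS or any S3 client library; `ObjectStore` is a hypothetical in-memory stand-in for a bucket that only supports put, get and list, with no mount and no shared metadata. A listing one client keeps locally does not change when another client writes a new object.

```python
from typing import Dict, List


class ObjectStore:
    """Hypothetical in-memory stand-in for an S3-style bucket (not a real NOS/S3 API)."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, body: bytes) -> None:
        # A PUT silently replaces any object that already has the same key.
        self._objects[key] = body

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def list(self, prefix: str) -> List[str]:
        # Every call builds a fresh listing; the store pushes nothing to clients.
        return sorted(k for k in self._objects if k.startswith(prefix))


store = ObjectStore()
store.put("NIGHTLYProcessing/a.csv", b"1,x\n")

# Client B lists the folder once and keeps that listing locally.
snapshot = store.list("NIGHTLYProcessing/")

# Client A adds a new object; nothing notifies client B.
store.put("NIGHTLYProcessing/b.csv", b"2,y\n")

print(snapshot)                          # stale: ['NIGHTLYProcessing/a.csv']
print(store.list("NIGHTLYProcessing/"))  # fresh: both keys
```

Client B only learns about `b.csv` by listing the folder again, which is the “go to that folder to see what is there” behavior described above.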

My observation of this characteristic is that I can create a table on NOS:

- On top of a bucket

- On a folder in a bucket

- On a file in a folder in a bucket

- On many files in a folder in a bucket

Then I can read the data using the table’s schema. Because of this behavior, I can also overwrite the file with a different file of the same name, and the table will read the new data through the same schema.

Similarly, if I add additional files to a folder in a bucket and these files all have the same layout, my table schema will read them all as one source of data for my table.
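Both behaviors follow from the table re-listing and re-reading its location on every query. The Python sketch below models that with a plain dict standing in for the bucket. `query_table`, the `results/` prefix and the two-column CSV layout (an integer and a text label) are hypothetical illustrations, not how Vantage is implemented internally.

```python
import csv
import io
from typing import Dict, List, Tuple

Row = Tuple[int, str]  # hypothetical table schema: (id, label)


def query_table(bucket: Dict[str, str], location: str) -> List[Row]:
    """Re-list and re-read every object under `location` on each call; nothing is cached."""
    rows: List[Row] = []
    for key in sorted(k for k in bucket if k.startswith(location)):
        for rec in csv.reader(io.StringIO(bucket[key])):
            rows.append((int(rec[0]), rec[1]))
    return rows


bucket: Dict[str, str] = {"results/run.csv": "1,alpha\n"}
print(query_table(bucket, "results/"))  # [(1, 'alpha')]

bucket["results/run.csv"] = "2,beta\n"  # overwrite: same key, new content
print(query_table(bucket, "results/"))  # [(2, 'beta')]

bucket["results/run2.csv"] = "3,gamma\n"  # add a second file with the same layout
print(query_table(bucket, "results/"))  # [(2, 'beta'), (3, 'gamma')]
```

The “table definition” (the location prefix and the row layout) never changes; only the objects under it do.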

This behavior has useful benefits as a shared data mechanism.

- I never have to drop the table and create a new one when a file changes.

- I can fill a bucket/folder with files, and the table grows with each file without any change to the Vantage database.

- I can overwrite an existing file with a new file with the same filename, but with different data, under the covers without dropping the table and recreating it to account for the new file.

This makes data sharing on NOS interesting and easier to manage. It is all possible because NOS is not a mounted filesystem. It is object storage.

Is this behavior a feature or bug? It is absolutely a valuable feature.

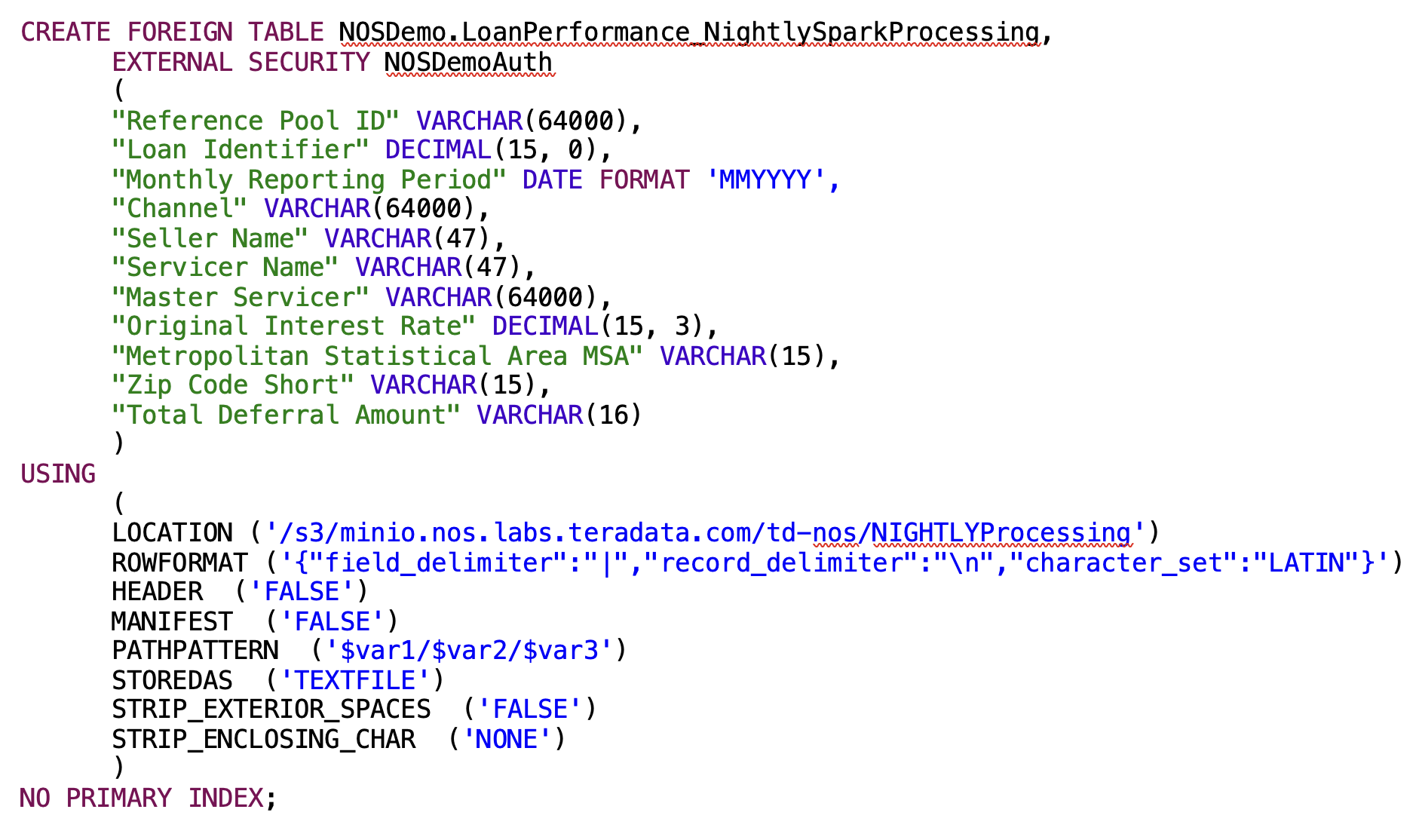

An example of a table schema defined on the contents of a bucket/folder might look like this,

Where the bucket/folder is

Notice that I did not specify a file name, only the folder. Any file, with any file name, that is placed in this folder will be read when the table is queried, as long as its layout matches the table schema (in this case, a CSV formatted file). Files that don’t fit the table schema will be skipped.
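The skip rule can be sketched the same way. This Python model checks every record in a file against a hypothetical three-column schema (name, integer count, decimal score) and drops the whole file if any record does not fit. It only mirrors the behavior described above; it is an assumption for illustration, not a description of how Vantage validates files.

```python
import csv
import io
from typing import Dict, List, Optional, Tuple

Row = Tuple[str, int, float]  # hypothetical schema: (name, count, score)


def parse_file(text: str) -> Optional[List[Row]]:
    """Return the rows if every record fits the schema, else None so the caller skips the file."""
    rows: List[Row] = []
    for rec in csv.reader(io.StringIO(text)):
        if len(rec) != 3:
            return None
        try:
            rows.append((rec[0], int(rec[1]), float(rec[2])))
        except ValueError:
            return None
    return rows


def read_folder(bucket: Dict[str, str], folder: str) -> List[Row]:
    rows: List[Row] = []
    for key in sorted(k for k in bucket if k.startswith(folder)):
        parsed = parse_file(bucket[key])
        if parsed is None:
            continue  # layout does not match the table schema: skip this file
        rows.extend(parsed)
    return rows


bucket = {
    "NIGHTLYProcessing/SparkResults.csv": "a,1,0.5\nb,2,1.5\n",
    "NIGHTLYProcessing/notes.txt": "free text, not a table\n",
}
print(read_folder(bucket, "NIGHTLYProcessing/"))  # [('a', 1, 0.5), ('b', 2, 1.5)]
```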

In this case, the file stored in the bucket/folder (NIGHTLYProcessing) is named:

SparkResults.csv

In this example, the file is the output of an external Spark system that performs some low-level data processing and stores its results in this bucket/folder under the exact same file name every time, overwriting the previous file. Each time the Spark processing finishes, the file contains new results. Thus, a query of the table will yield different results without dropping and recreating the table each time.

In another example, I could add a timestamp to the Spark result file name to make it unique within the folder, such as:

SparkResults_2023-01-25_2051_16.055.csv

When this file is stored in the bucket/folder, it does not overwrite any existing file; it is simply added to the folder. Now, when the table is queried, all of the files in the folder, both the previous results and the new result, are read as a single source of data for the table.
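The difference between the two naming strategies can be shown in a few lines of Python. `timestamped_name` is a hypothetical helper that reproduces the `SparkResults_YYYY-MM-DD_HHMM_SS.mmm.csv` pattern from the example above; the post does not say how the Spark job actually builds its file names. Two dicts stand in for the folder: one written with a fixed name, one with timestamped names.

```python
from datetime import datetime, timedelta
from typing import Dict


def timestamped_name(base: str, ts: datetime) -> str:
    # Hypothetical helper: base_YYYY-MM-DD_HHMM_SS.mmm.csv (milliseconds zero-padded to 3 digits).
    millis = ts.microsecond // 1000
    return f"{base}_{ts:%Y-%m-%d_%H%M_%S}.{millis:03d}.csv"


folder_fixed: Dict[str, str] = {}   # Spark writes the same file name every run
folder_unique: Dict[str, str] = {}  # Spark writes a timestamped file name every run

start = datetime(2023, 1, 25, 20, 51, 16, 55000)
for run in range(3):
    ts = start + timedelta(days=run)
    result = f"run {run}\n"
    folder_fixed["SparkResults.csv"] = result                     # overwrites the previous run
    folder_unique[timestamped_name("SparkResults", ts)] = result  # accumulates alongside earlier runs

print(timestamped_name("SparkResults", start))  # SparkResults_2023-01-25_2051_16.055.csv
print(len(folder_fixed), len(folder_unique))    # 1 3
```

A table defined on the fixed-name folder would return only the latest run, while one defined on the timestamped folder would return all three.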

This makes NOS in Teradata’s VantageCloud with ClearScape Analytics, available since version 17.05, a powerful vehicle for sharing data across an enterprise, whether on-premises, in the cloud or in a hybrid deployment.

There is one additional capability to consider when working with high-performance database analytics. If the object storage must be accessed over a Wide Area Network (WAN) with high latency, whether due to distance, congestion or design (refer to this previous blog on removing network latency), your analytic experience may be less than optimal because of poor network performance. Leveraging Vcinity’s technology with VantageCloud’s NOS feature will make the overall experience seem as though all of your data is local.

More detailed information about Teradata’s Vantage Native Object Storage can be found here.